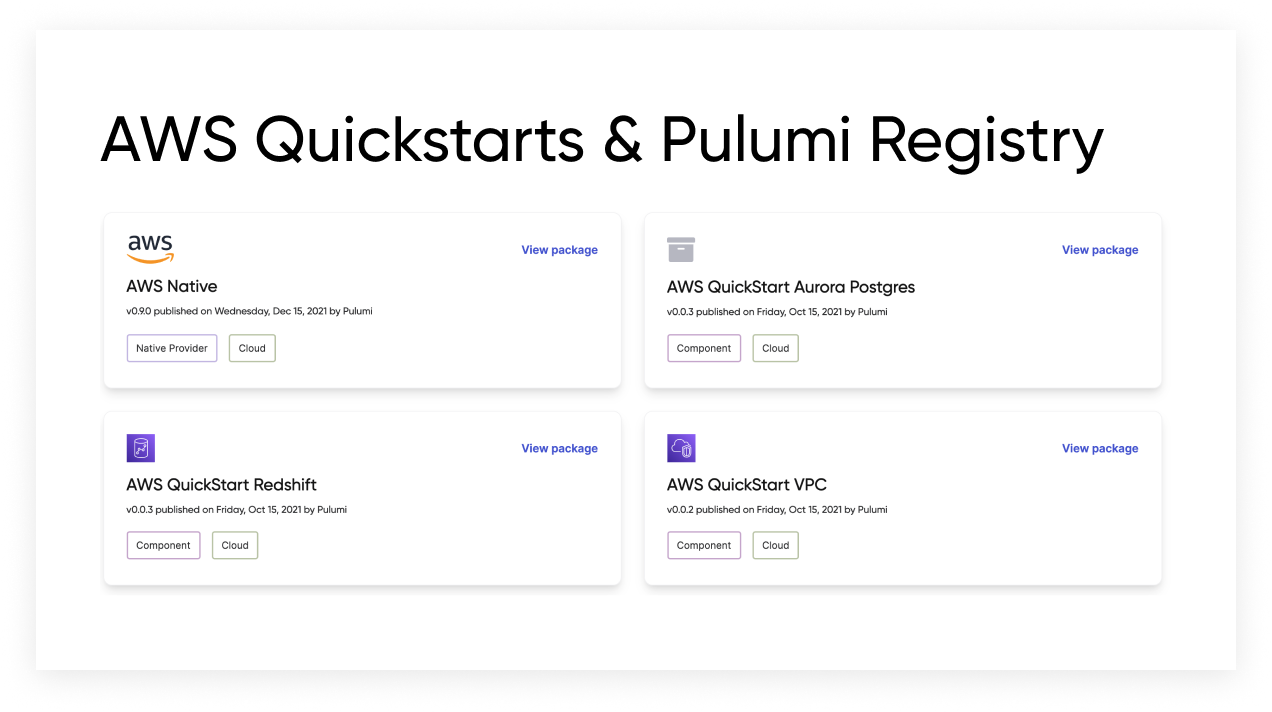

Building an ETL pipeline with Amazon Redshift and AWS Glue

In our last episode, "Deploying a Data Warehouse with Pulumi and Amazon Redshift", we used Pulumi to load unstructured data from Amazon S3 into an Amazon Redshift cluster. That went well, but you may recall that the post ended with a few unanswered questions: How do we avoid importing and processing the same data twice? How can we transform the data during the ingestion process?