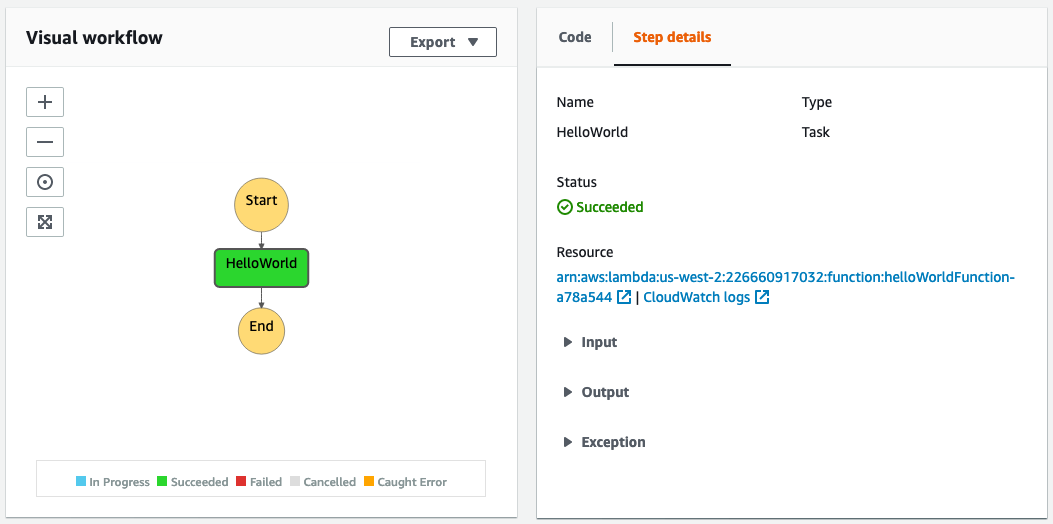

How Webiny Built a Serverless Application Framework

Building an open-source framework for building serverless applications has many challenges, one of which is deploying cloud infrastructure resources. In this article, learn how Webiny uses Pulumi to enable its users to easily deploy and develop applications built on top of serverless cloud technologies.