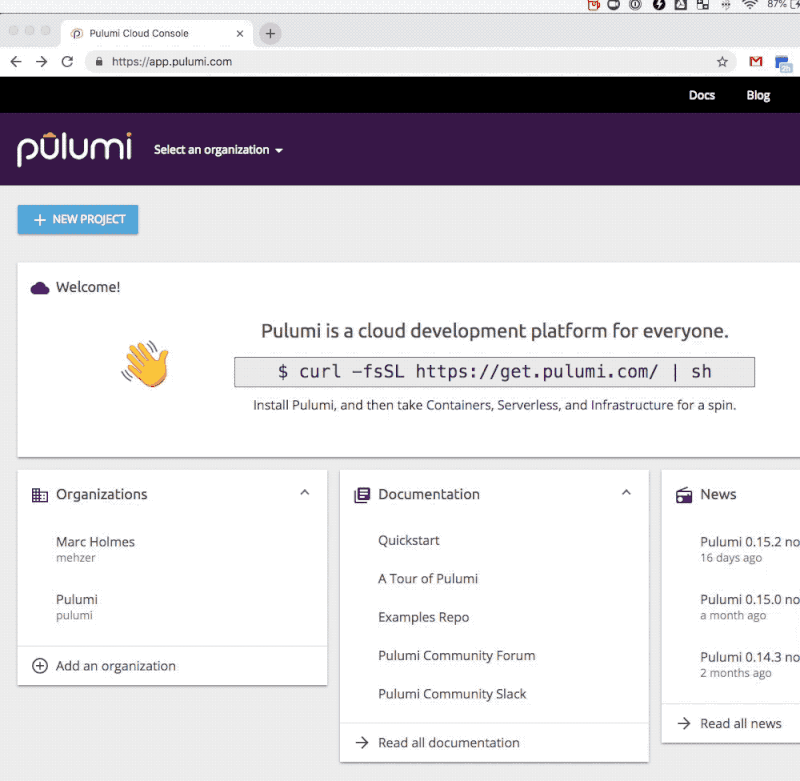

This guest post is from Simon Zelazny of Wallaroo Labs.

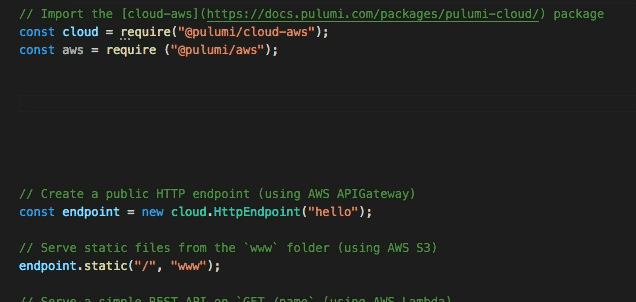

Find out how Wallaroo powered their cluster provisioning with Pulumi, for data science on demand.

Last month, we took a

long-running pandas classifier

and made it run faster by leveraging Wallaroo’s parallelization

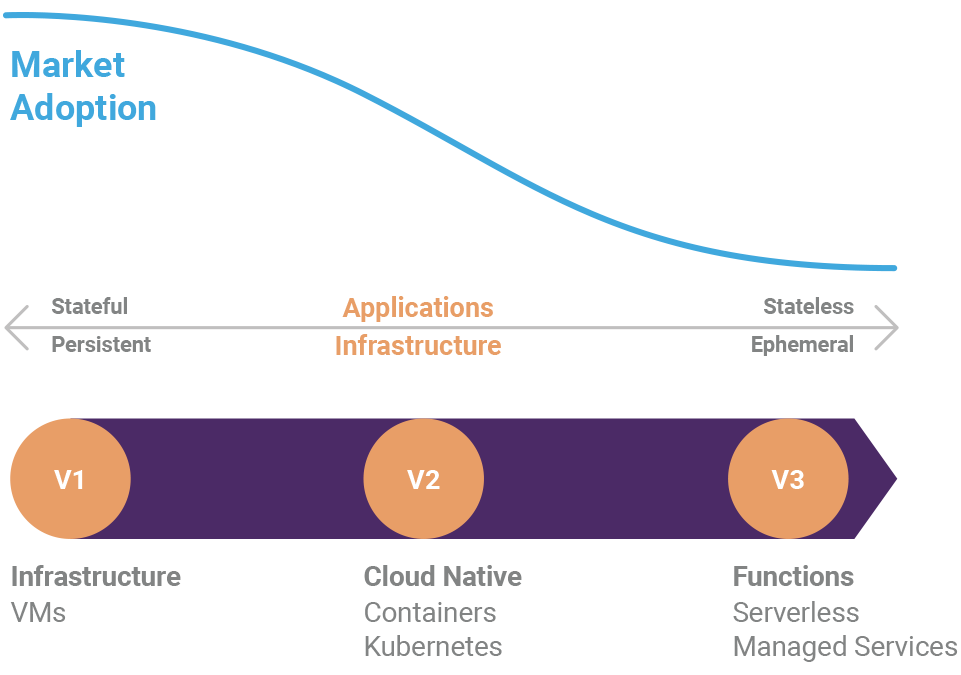

capabilities. This time around, we’d like to kick it up a notch and see

if we can keep scaling out to meet higher demand. We’d also like to be

as economical as possible: provision infrastructure as needed and

de-provision it when we’re done processing.

If you don’t feel like reading the post linked above, here’s a short summary of the situation: there’s a batch job that you’re running every hour, on the hour. This job receives a CSV file and classifies each row of the file using a pandas-based algorithm. The run-time of the job is starting to near the one-hour mark, and there’s concern that the pipeline will break down once the input data grows past a particular point.

In that blog post, we show how to split up the input data into smaller dataframes and distribute them among workers in an ad-hoc Wallaroo cluster running on one physical machine. Parallelizing the work in this manner buys us a lot of time, and the batch job can continue processing increasing amounts of data.
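To make the split-and-distribute idea concrete, here is a minimal sketch of its shape. It is not Wallaroo code and not the classifier from the earlier post: plain lists of rows stand in for the smaller dataframes, a local thread pool stands in for the Wallaroo workers, and `split_rows`, `classify_chunk`, and `classify_in_parallel` are hypothetical names with a toy threshold rule in place of the real algorithm.

```python
from concurrent.futures import ThreadPoolExecutor


def split_rows(rows, n_chunks):
    """Split rows into at most n_chunks contiguous chunks of near-equal size."""
    size, extra = divmod(len(rows), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional row each.
        end = start + size + (1 if i < extra else 0)
        if end > start:
            chunks.append(rows[start:end])
        start = end
    return chunks


def classify_chunk(chunk):
    """Hypothetical stand-in classifier: label each (row_id, value) pair."""
    return [(row_id, "high" if value >= 0.5 else "low") for row_id, value in chunk]


def classify_in_parallel(rows, n_workers=4):
    """Classify each chunk on its own worker, then recombine in input order."""
    chunks = split_rows(rows, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map yields results in chunk order, so rows keep their positions.
        results = list(pool.map(classify_chunk, chunks))
    return [labelled for chunk_result in results for labelled in chunk_result]


if __name__ == "__main__":
    rows = [(i, i / 10) for i in range(10)]
    print(classify_in_parallel(rows, n_workers=3))
```

The thread pool only illustrates the structure of the approach. For CPU-bound classification, the actual speedup comes from running the chunks on separate processes or machines, which is the role the Wallaroo workers play.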
