Getting to ChatOps with Pulumi Webhooks

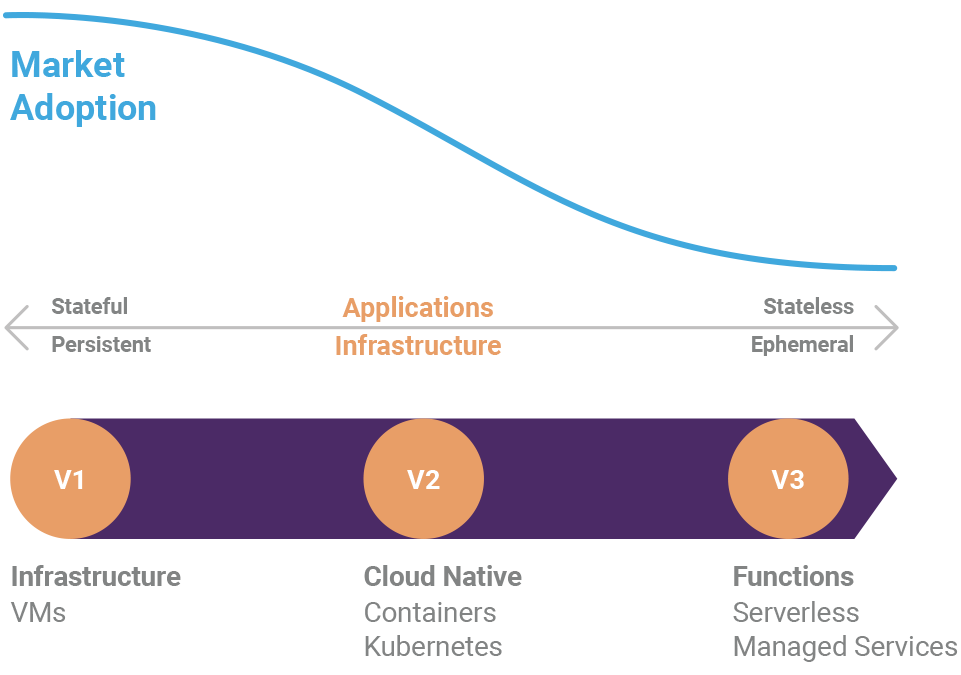

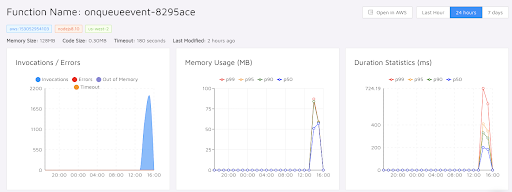

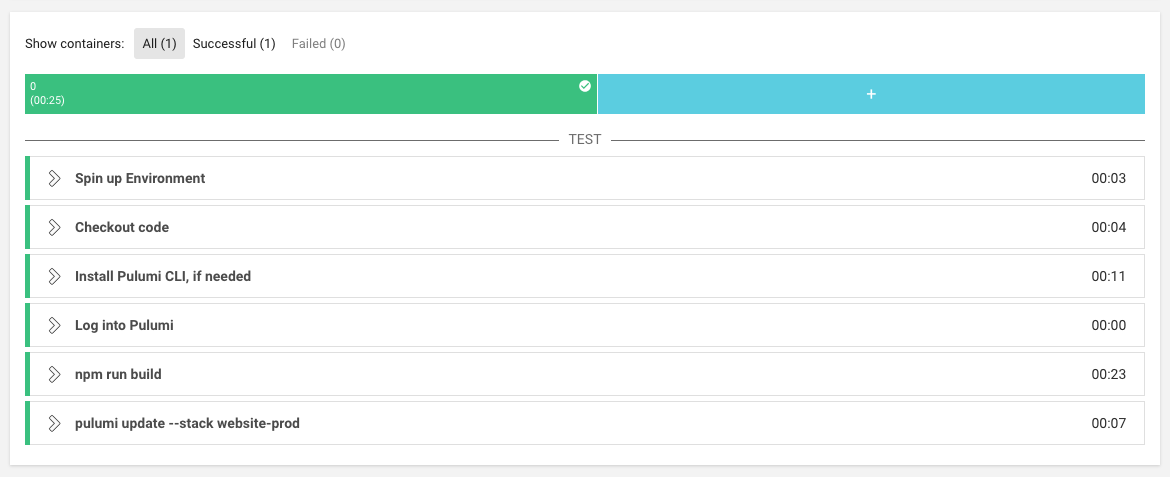

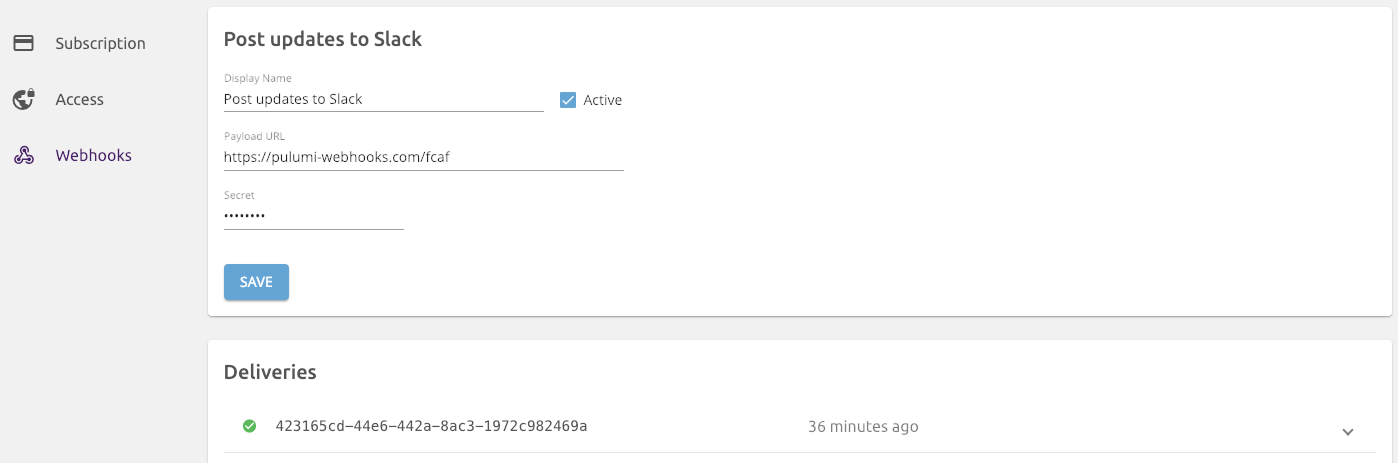

Today we are delighted to announce the availability of Webhooks on Pulumi. Webhooks are a common mechanism for notifying teams of events and letting them react. In Pulumi’s case, this means receiving notifications of infrastructure changes (be it on Kubernetes, AWS, or any other cloud), responding to those changes as part of ‘ChatOps’, or feeding them into other build pipelines, all to improve the delivery of cloud native infrastructure.
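To make the ChatOps idea concrete, here is a minimal sketch of the step that turns an incoming webhook event into a chat message. The `StackUpdateEvent` shape and its field names are hypothetical, chosen for illustration only; they are not Pulumi's documented payload, so check the webhook reference for the real fields before relying on them.

```typescript
// Hypothetical event shape: these field names are illustrative assumptions,
// not Pulumi's documented webhook payload.
interface StackUpdateEvent {
  kind: string; // e.g. "stack_update"
  projectName: string;
  stackName: string;
  result: "succeeded" | "failed";
  resourceChanges: { create: number; update: number; delete: number };
}

// Turn an event into a one-line summary suitable for posting to a chat channel.
function formatChatMessage(event: StackUpdateEvent): string {
  const { create, update, delete: deleted } = event.resourceChanges;
  // Make failures stand out in a busy channel.
  const status = event.result === "succeeded" ? "succeeded" : "FAILED";
  return (
    `[${event.projectName}/${event.stackName}] ${event.kind} ${status}: ` +
    `+${create} ~${update} -${deleted}`
  );
}

const example: StackUpdateEvent = {
  kind: "stack_update",
  projectName: "website",
  stackName: "production",
  result: "succeeded",
  resourceChanges: { create: 2, update: 1, delete: 0 },
};

console.log(formatChatMessage(example));
// prints "[website/production] stack_update succeeded: +2 ~1 -0"
```

In a real setup, a small HTTP handler would receive the webhook request, parse its body into a structure like this, and forward the formatted string to your chat tool's own incoming webhook.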

Pulumi Webhooks are available for the Team and Enterprise editions of Pulumi. If you’re keen to try them out, start a trial of Team Edition here.